Edge computing is a distributed computing paradigm in which computation is performed near the source of data generation rather than solely on a centralized cloud server. In traditional computing models, data is sent to a central cloud server for processing and analysis; in edge computing, processing occurs at or near the "edge" of the network, close to where the data is produced.

Key characteristics of edge computing include:

- Low Latency: By processing data closer to where it is generated, edge computing reduces the time it takes for data to travel, resulting in lower latency. This is crucial for applications where real-time or near-real-time processing is essential, such as in the Internet of Things (IoT), autonomous vehicles, and augmented reality.

- Bandwidth Efficiency: Edge computing can help reduce the amount of data that needs to be transmitted to the cloud, optimizing bandwidth usage. This is particularly beneficial in scenarios where transmitting large volumes of data to a central server is impractical or costly.

- Privacy and Security: Some applications require sensitive data to be processed locally rather than transmitted to a central server. Handling such data at the edge limits its exposure in transit, which can improve privacy and security.

- Scalability: Edge computing allows for distributed processing, making it easier to scale computing resources horizontally by deploying additional edge devices. This scalability is valuable in dynamic and rapidly changing environments.

- Redundancy and Reliability: Edge computing can improve system reliability by distributing processing across multiple edge devices. This can enhance fault tolerance and reduce the impact of failures in individual components.

- Real-time Decision-Making: Edge computing enables real-time decision-making at the source of data generation. This is particularly important in applications where quick responses are critical, such as industrial automation and control systems.
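
To make the bandwidth point concrete, here is a minimal sketch of one common pattern: an edge device reduces a window of raw sensor readings to a small summary before anything is sent upstream. The function names, the sample values, and the choice of JSON as the wire format are assumptions made for illustration only.

```python
import json
import statistics


def summarize_window(readings):
    """Reduce a window of raw readings to a small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


def payload_bytes(obj):
    """Size in bytes of the JSON-encoded payload."""
    return len(json.dumps(obj).encode("utf-8"))


# Hypothetical window: 600 temperature samples collected on the device.
raw_window = [20.0 + (i % 10) * 0.1 for i in range(600)]
summary = summarize_window(raw_window)

raw_size = payload_bytes(raw_window)      # cost of shipping every sample
summary_size = payload_bytes(summary)     # cost of shipping the summary only
print(f"raw: {raw_size} B, summary: {summary_size} B")
```

The trade-off is that the cloud side only sees the aggregate, so this kind of reduction suits cases where the raw samples are not needed centrally.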

Common use cases for edge computing include smart cities, industrial automation, healthcare, retail, and IoT applications. Edge computing is not intended to replace cloud computing but to complement it, forming a hybrid architecture that combines the strengths of centralized cloud resources and distributed edge devices.

Let's explore a couple of examples to illustrate the concept of edge computing and how it differs from traditional centralized computing.

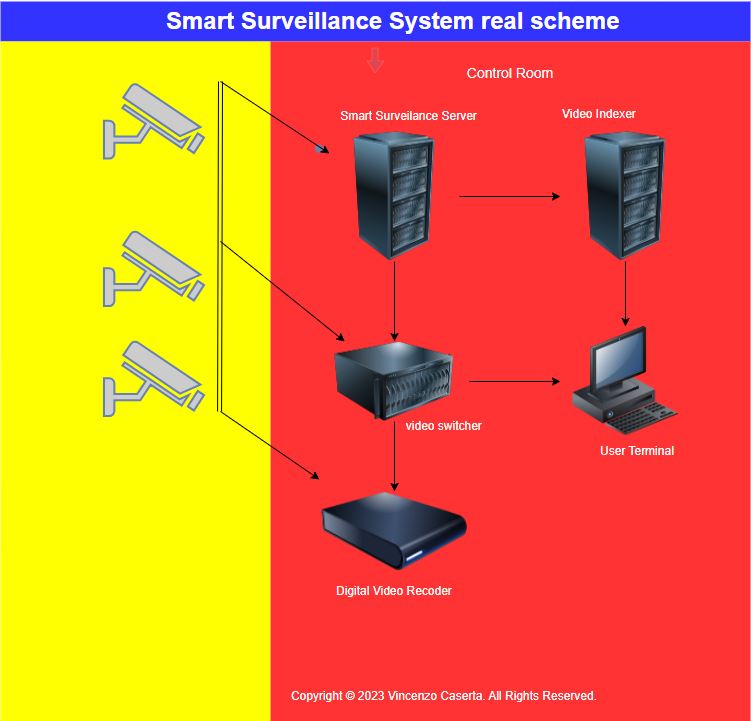

Example 1: Smart Surveillance System

Traditional Centralized Computing: In a traditional surveillance system, video feeds from cameras are sent to a central server for processing and analysis. The server handles tasks like object detection, facial recognition, and alert generation. This setup has the following drawbacks:

- High Latency: Delays in processing can impact the system's ability to respond quickly to security events.

- Bandwidth Issues: Continuous streaming of video data to the central server consumes a significant amount of bandwidth.

Edge Computing: In an edge computing-based surveillance system, cameras are equipped with onboard processing capabilities. Each camera can perform local analysis, such as identifying suspicious activities or individuals, without sending the entire video stream to a central server. Only relevant information or alerts are sent to the central server. This setup offers the following advantages:

- Low Latency: Immediate local processing allows for real-time response to security events.

- Bandwidth Efficiency: Transmitting only important information reduces the burden on the network.
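
The edge-side flow described above can be sketched roughly as follows. This is an illustration, not a real surveillance pipeline: simple frame differencing stands in for the object detection or facial recognition a real camera would run, frames are modeled as flat lists of grayscale pixel values, and `Alert`, `motion_score`, `process_on_camera`, and the threshold are all hypothetical names and values.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    camera_id: str
    frame_index: int
    motion_score: float


def motion_score(prev_frame, frame):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = sum(abs(a - b) for a, b in zip(prev_frame, frame))
    return total / len(frame)


def process_on_camera(camera_id, frames, threshold=10.0):
    """Runs on the camera: analyze every frame, return only the alerts to send upstream."""
    alerts = []
    for i in range(1, len(frames)):
        score = motion_score(frames[i - 1], frames[i])
        if score > threshold:
            alerts.append(Alert(camera_id, i, score))
    return alerts


# Four tiny 4-pixel "frames"; only the third differs sharply from its predecessor.
frames = [
    [10, 10, 10, 10],
    [10, 11, 10, 10],
    [200, 210, 190, 205],
    [200, 210, 190, 204],
]
alerts = process_on_camera("cam-01", frames)
print(f"{len(frames)} frames analyzed locally, {len(alerts)} alert(s) forwarded")
```

Every frame is inspected on the device, but only the `Alert` records would cross the network, which is where the latency and bandwidth savings come from.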

Example 2: Industrial IoT (Internet of Things)

Traditional Centralized Computing: In a factory with IoT devices, sensors collect data on machine performance, temperature, and other metrics. This data is typically sent to a centralized cloud server for analysis. Drawbacks include:

- Latency: Depending on the volume of data and network conditions, there may be delays in receiving insights from the data.

- Reliability Concerns: If the connection to the cloud is lost, real-time monitoring and control become challenging.

Edge Computing: In an edge computing setup, edge devices are deployed throughout the factory floor. These devices process and analyze the sensor data locally. Critical decisions, such as adjusting machine settings in response to anomalies, can be made without relying on a distant cloud server. This offers several advantages:

- Low Latency: Quick response times for critical decisions.

- Redundancy: If one edge device fails, others can still operate independently.

- Bandwidth Efficiency: Transmitting only relevant information to the cloud reduces bandwidth usage.
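
As a rough sketch of how an edge device on the factory floor might behave, the example below makes the control decision locally and buffers events for the cloud until the connection is available (a store-and-forward approach). The `EdgeController` class, the temperature limit, the speed values, and the `send` callback are all assumptions for illustration; a real deployment would use its own control logic and transport.

```python
from collections import deque


class EdgeController:
    """Hypothetical edge node: decides locally, reports to the cloud when it can."""

    def __init__(self, temp_limit=80.0, max_buffered=1000):
        self.temp_limit = temp_limit
        # Bounded buffer: the oldest events are dropped if it fills up.
        self.pending = deque(maxlen=max_buffered)
        self.machine_speed = 100  # percent of nominal speed

    def handle_reading(self, temperature):
        """Local decision: throttle the machine at once on an over-temperature reading."""
        if temperature > self.temp_limit:
            self.machine_speed = 50
            self.pending.append({"event": "overheat", "temperature": temperature})
        else:
            self.machine_speed = 100

    def flush(self, cloud_online, send):
        """Forward buffered events if the cloud link is up; otherwise keep them."""
        sent = 0
        while cloud_online and self.pending:
            send(self.pending.popleft())
            sent += 1
        return sent


controller = EdgeController()
uploaded = []  # stands in for the cloud endpoint

for temp in [71.5, 79.9, 86.2]:
    controller.handle_reading(temp)

offline_sent = controller.flush(cloud_online=False, send=uploaded.append)  # link down
speed_during_outage = controller.machine_speed
online_sent = controller.flush(cloud_online=True, send=uploaded.append)    # link restored
print(speed_during_outage, offline_sent, online_sent, uploaded)
```

The machine is throttled even while the cloud link is down, and only the anomaly event, not every reading, is uploaded once the link returns.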

In both examples, edge computing moves processing closer to the data source, which reduces latency and cuts the volume of data sent over the network. This distributed approach is particularly valuable where real-time or near-real-time processing is crucial, as in surveillance, industrial automation, and other IoT applications.